DragGAN

Image Generation

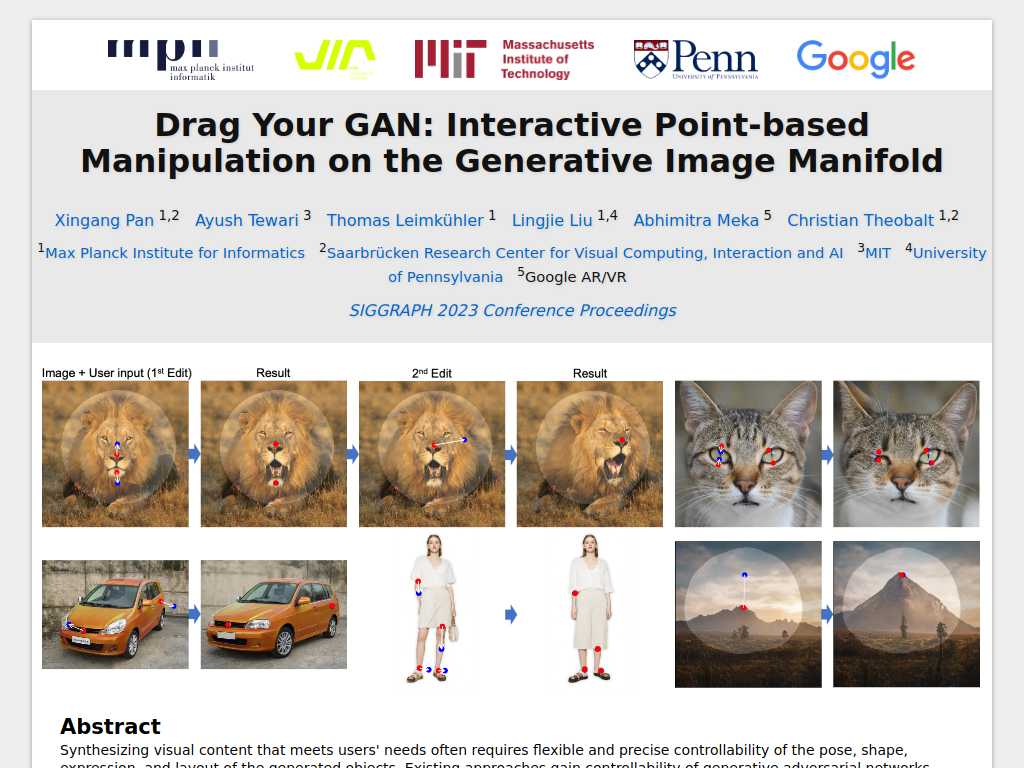

Interactive Point-based Manipulation on the Generative Image Manifold

About DragGAN

DragGAN is a tool for precise, interactive image manipulation: users place handle points on an image and 'drag' them to target positions. This enables flexible adjustments to the pose, shape, expression, and layout of many kinds of objects, such as animals, cars, and landscapes. It combines feature-based motion supervision, which moves the handle points toward their targets, with a new point-tracking approach that keeps locating the handles as they move. Together these produce realistic outputs even in challenging scenarios. This makes DragGAN especially valuable for creative professionals, researchers, and anyone who needs fine-grained control when creating visual content.
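
Feature-based motion supervision nudges the generator's features in a small neighborhood around each handle point one small step toward that handle's target. The sketch below computes only the value of such a loss. It uses a plain nested-list feature map and a circular neighborhood of radius `r1`, and it samples the shifted positions bilinearly. All of these are assumptions for illustration, and every name here (`bilinear`, `motion_supervision_loss`) is hypothetical. The sketch also omits the optional mask term. In the actual method, the loss is minimized with automatic differentiation by back-propagating to the GAN's latent code. During that step the unshifted features are treated as constants.

```python
import math


def bilinear(feat, x, y):
    """Sample a feature map at continuous column x and row y (bilinear)."""
    h, w = len(feat), len(feat[0])
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    out = []
    for c in range(len(feat[y0][x0])):
        top = (1 - ax) * feat[y0][x0][c] + ax * feat[y0][x1][c]
        bottom = (1 - ax) * feat[y1][x0][c] + ax * feat[y1][x1][c]
        out.append((1 - ay) * top + ay * bottom)
    return out


def motion_supervision_loss(feat, handles, targets, r1=1):
    """Hypothetical sketch: L1 distance between the feature at q and the
    feature at q + d_i, summed over each handle's radius-r1 neighborhood.
    The optional mask term is omitted.

    feat: feat[row][col] is a feature vector (list of floats).
    handles, targets: lists of integer (col, row) pixel positions.
    """
    h, w = len(feat), len(feat[0])
    loss = 0.0
    for (px, py), (tx, ty) in zip(handles, targets):
        dist = math.hypot(tx - px, ty - py)
        if dist == 0:
            continue  # this handle has already reached its target
        dx, dy = (tx - px) / dist, (ty - py) / dist  # unit step d_i
        for qy in range(py - r1, py + r1 + 1):
            for qx in range(px - r1, px + r1 + 1):
                if (qx - px) ** 2 + (qy - py) ** 2 > r1 ** 2:
                    continue  # outside the circular neighborhood
                sx, sy = qx + dx, qy + dy
                if not (0 <= qx < w and 0 <= qy < h
                        and 0 <= sx <= w - 1 and 0 <= sy <= h - 1):
                    continue  # skip samples that fall off the map
                # With autograd, F(q) would be detached (no gradient);
                # only the shifted sample F(q + d_i) would be optimized.
                f_q = feat[qy][qx]
                f_shifted = bilinear(feat, sx, sy)
                loss += sum(abs(a - b) for a, b in zip(f_q, f_shifted))
    return loss


# Toy feature map whose single channel equals the column index.
feat = [[[float(col)] for col in range(6)] for _ in range(6)]
print(motion_supervision_loss(feat, handles=[(2, 2)], targets=[(5, 2)]))  # 5.0
```

In this toy map, each of the five neighborhood pixels differs by exactly one unit from the feature one step further along the drag direction. That gives a loss of 5.0. Minimizing the loss would pull the content at `q + d_i` toward what is currently at `q`, moving the object slightly toward the target.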

Key Features

- Interactive point dragging

- Feature-based motion supervision

- New point tracking approach

- Realistic image generation

- Wide range of applications

- Precise image control

- GAN inversion for real images

- Handles challenging scenarios

- Applicable to various categories

- Qualitative and quantitative advantages over prior approaches

Tags

GAN, image manipulation, point tracking, feature-based motion supervision

FAQs

What is DragGAN?

DragGAN is an interactive tool for manipulating generated images by dragging points to specific target positions, allowing for precise control over pose, shape, expression, and layout.

How does DragGAN differ from existing GAN manipulation methods?

Unlike existing methods that rely on manually annotated training data or prior 3D models, DragGAN offers flexible and precise control by allowing users to drag points directly on the image.

What are the key components of DragGAN?

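DragGAN has two key components. The first is feature-based motion supervision, which drives each handle point toward its target position. The second is a new point-tracking approach that uses the generator's discriminative features to keep locating the handle points as they move.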
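
The point-tracking step can be sketched as a nearest-neighbor search. After each optimization step, the handle moves to the pixel near its previous position whose feature vector is closest, in L1 distance, to the handle's initial feature. In this sketch, the search window is a square of half-width `r2`, and features are stored as nested lists; both choices are assumptions. The names `track_point` and `toy_features` are hypothetical and used only for illustration.

```python
def track_point(feat, f0, p, r2=2):
    """Hypothetical sketch: return the pixel in a square window of half-width
    r2 around p whose feature is closest (L1 distance) to the initial handle
    feature f0.

    feat: feat[row][col] is a feature vector; p is an integer (col, row).
    """
    h, w = len(feat), len(feat[0])
    px, py = p
    best, best_dist = p, float("inf")
    for qy in range(max(0, py - r2), min(h, py + r2 + 1)):
        for qx in range(max(0, px - r2), min(w, px + r2 + 1)):
            dist = sum(abs(a - b) for a, b in zip(feat[qy][qx], f0))
            if dist < best_dist:
                best, best_dist = (qx, qy), dist
    return best


def toy_features(shift, size=8):
    """Hypothetical toy map: each feature encodes (col - shift, row), i.e. a
    location on an object that has been translated `shift` pixels right."""
    return [[[float(col - shift), float(row)] for col in range(size)]
            for row in range(size)]


before = toy_features(shift=0)
f0 = before[3][3]                      # initial feature of the handle at (3, 3)
after = toy_features(shift=1)          # object content moved one pixel right
print(track_point(after, f0, (3, 3)))  # (4, 3)
```

Because the search compares against the handle's initial feature, the tracked point follows the same part of the object. It does not stay at a fixed pixel location.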

What categories can DragGAN be applied to?

DragGAN can be applied to a wide range of categories, including animals, cars, humans, landscapes, and more.

Can DragGAN handle challenging scenarios?

Yes, DragGAN can produce realistic outputs even in challenging scenarios such as hallucinating occluded content and deforming shapes that follow the object's rigidity.

How does DragGAN achieve realistic results?

DragGAN performs its edits by optimizing the GAN's latent code rather than editing pixels directly. Every result is therefore produced by the generator itself and stays on the learned generative image manifold, which keeps the output realistic.

Is DragGAN suitable for real image manipulation?

Yes. DragGAN can also manipulate real images by first embedding them into the GAN's latent space through a process known as GAN inversion, and then editing them like generated images.
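
GAN inversion searches for a latent code whose generated image reproduces a given photo. Once found, that code can be edited like the code of any generated image. Below is a minimal optimization-based sketch. To keep it runnable with the standard library, it assumes a hypothetical linear "generator" (`A`, `generate`) and a plain squared-error objective. It is not claimed to be the inversion method used with DragGAN. Real inversion operates on a deep generator and often adds perceptual losses.

```python
# Hypothetical stand-in for a pretrained generator: a fixed linear map from a
# 2-D latent code to a 4-pixel "image". Real generators are deep networks.
A = [[1.0, 0.5],
     [0.0, 1.0],
     [2.0, -1.0],
     [0.5, 0.5]]


def generate(w):
    """Render the toy image for latent code w."""
    return [row[0] * w[0] + row[1] * w[1] for row in A]


def invert(image, lr=0.05, steps=2000):
    """Optimization-based inversion: gradient descent on the latent code to
    minimize 0.5 * ||generate(w) - image||^2."""
    w = [0.0, 0.0]  # arbitrary initial latent code
    for _ in range(steps):
        residual = [g - x for g, x in zip(generate(w), image)]
        # Gradient of the squared error w.r.t. w is A^T @ residual.
        grad = [sum(A[i][j] * residual[i] for i in range(len(A)))
                for j in range(len(w))]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


photo = generate([1.5, -0.5])        # pretend this is a real photo
w_est = invert(photo)
print([round(v, 6) for v in w_est])  # [1.5, -0.5]
# w_est can now be edited like the latent code of any generated image.
```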

Who developed DragGAN?

DragGAN was developed by Xingang Pan, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt, whose affiliations include the Max Planck Institute for Informatics.

In what conference was DragGAN introduced?

DragGAN was introduced in the ACM SIGGRAPH 2023 Conference Proceedings.

Where can I find more information on DragGAN?

More information on DragGAN is available on the project webpage, which also provides downloads of the paper and code.