BenchLLM

AI Assistant

Revolutionize Your LLM App Evaluation with BenchLLM

About BenchLLM

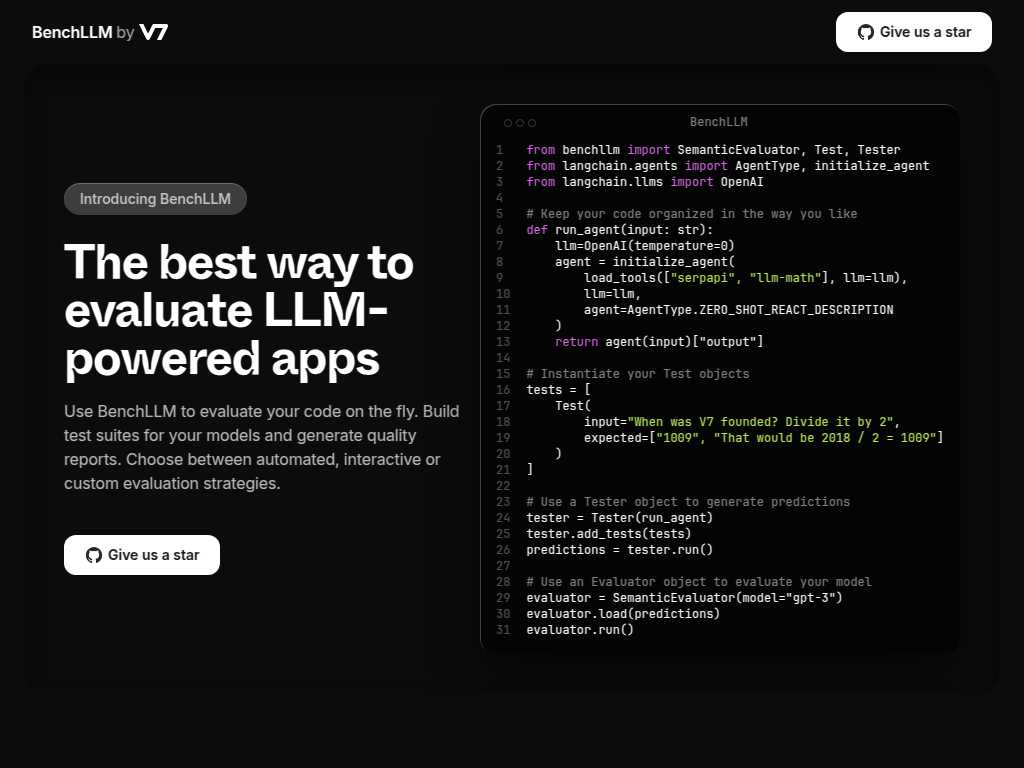

BenchLLM, developed and maintained by V7, is a tool for evaluating applications built on large language models (LLMs). It lets developers evaluate code on the fly, build test suites, and generate quality reports. It supports automated, interactive, or custom evaluation strategies, so it can be adapted to different testing environments.

BenchLLM is compatible with APIs such as OpenAI and LangChain. Its API supports test definition in JSON or YAML, and tests can be organized into suites and summarized in evaluation reports. The tool also integrates into CI/CD pipelines, where it can continuously monitor model performance and detect regressions in production. Evaluations are run through CLI commands.

Getting started requires only downloading and installing the tool. Users are encouraged to share feedback, ideas, and contributions.
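
To make the idea of JSON test definitions and automated evaluation concrete, here is a minimal, library-free sketch in Python. It does not use BenchLLM's own API: the `input`/`expected` field names, the `toy_model` stand-in, and the `string_match` evaluator are all assumptions chosen for illustration.

```python
import json
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical test suite. The "id", "input" and "expected" fields are
# assumptions for this sketch, not a documented BenchLLM schema.
SUITE_JSON = """
[
  {"id": "add", "input": "What is 1+1?", "expected": ["2", "2.0"]},
  {"id": "capital", "input": "Capital of France?", "expected": ["Paris"]}
]
"""


@dataclass
class Result:
    test_id: str
    output: str
    passed: bool


def toy_model(prompt: str) -> str:
    """Stand-in for a call to an LLM-powered app (deliberately wrong once)."""
    answers = {"What is 1+1?": "2", "Capital of France?": "Lyon"}
    return answers.get(prompt, "")


def string_match(output: str, expected: List[str]) -> bool:
    """Automated evaluator: case-insensitive exact match against any expected answer."""
    norm = output.strip().lower()
    return any(norm == e.strip().lower() for e in expected)


def run_suite(suite_json: str, model: Callable[[str], str]) -> List[Result]:
    """Run every test case in the suite and record whether it passed."""
    results = []
    for case in json.loads(suite_json):
        output = model(case["input"])
        passed = string_match(output, case["expected"])
        results.append(Result(case["id"], output, passed))
    return results


if __name__ == "__main__":
    for r in run_suite(SUITE_JSON, toy_model):
        status = "PASS" if r.passed else "FAIL"
        print(f"{r.test_id}: {status} (output={r.output!r})")
```

Listing several acceptable answers per test keeps the evaluator simple while tolerating harmless variation in model output. A stricter or semantic evaluator could be swapped in for `string_match` without changing the suite format.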

Key Features

- Automated, interactive, and custom evaluation strategies

- Flexible API with support for OpenAI, LangChain, and other APIs

- Easy installation and setup

- Integration capabilities with CI/CD pipelines for continuous monitoring

- Comprehensive support for test suite building and quality report generation

- Intuitive test definition in JSON or YAML formats

- Effective for monitoring model performance and detecting regressions

- Developed and maintained by V7

- Encourages community feedback, ideas, and contributions

- Designed with usability and developer experience in mind
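
The CI/CD integration and regression detection listed above can be pictured as a gate that compares the current pass rate against a stored baseline. The sketch below is a generic illustration under that assumption, not BenchLLM's implementation. The function names, the `baseline_rate` parameter, and the `tolerance` parameter are hypothetical.

```python
from typing import Sequence


def pass_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of passing tests; an empty run counts as 0.0."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def ci_exit_code(outcomes: Sequence[bool],
                 baseline_rate: float,
                 tolerance: float = 0.0) -> int:
    """Return 0 if the run is at least as good as the baseline, else 1.

    `tolerance` allows a small drop before the pipeline is failed.
    """
    return 0 if pass_rate(outcomes) + tolerance >= baseline_rate else 1


def main() -> int:
    # Hypothetical outcomes from an evaluation run: 3 of 4 tests passed.
    outcomes = [True, True, False, True]
    return ci_exit_code(outcomes, baseline_rate=0.75)
```

In a pipeline step, a script would end with `sys.exit(main())` so that a nonzero exit code fails the build when the pass rate regresses.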